THE QUICKIE

This post covers:

- how to submit your blog or website to the major search engines (Google, Yahoo, Microsoft, and I've thrown in Ask) to invite them to start visiting your site - just use the forms below

- how to submit your "sitemap" to the search engines (touching on the role of the robots.txt file) to ensure that, if and when they start crawling your blog or web site, they'll index all your webpages comprehensively - use the forms below, and sign up for their various webmaster tools; if your blog is on Blogger you can try submitting several URLs (depending on how many posts you have in your blog) to cover your whole blog: http://BLOGNAME.blogspot.com/feeds/posts/default?start-index=1&max-results=500 and http://BLOGNAME.blogspot.com/feeds/posts/default?start-index=501&max-results=500 etc

- how to get your updated web pages re-indexed by the search engines for the changed content - this should happen automatically for Blogger blogs, at least if they're on Blogspot or custom domains.

THE LONG & SLOW

It's been well known for some time that most people navigate to websites via search engine searches, e.g. by typing the name of the site in the search box - even when they know the direct URL or web address.

Search engines like Google and Yahoo! send out software critters called bots, robots, web crawlers or spiders to crawl or "spider" webpages, following links from page to page and fetching back what they find for indexing and storage in the search company's vast databases. When someone uses a search engine, they're actually searching its databases, and the search engine decides what results to serve or return to the user (see Google 101: How Google crawls, indexes, and serves the web, which Google recently updated).

So to maximise visitor traffic to your site you need as a minimum to get the search engines to:

1. start indexing your website or blog for searching by their search engine users, and then

2. index it as completely and accurately as possible, by giving them the full structure or map of your site (a sitemap), and keep that index updated.

This post is an introductory howto which focuses mainly on 2 (getting your site indexed comprehensively), but I'll touch first on 1, i.e. search engine submission (submitting your blog to the search engines to ask them to index your posts). Trying to get your posts ranked higher in search engine results (the serve/return aspect and search engine optimisation or SEO) is yet another matter.

I'll only cover Google, Yahoo!, Microsoft Live Search (Live Search is the successor to MSN Search) and Ask.com. Why? Because they're the 3 or 4 key search engines in the English-speaking world, in my view: certainly, the vast majority of visitors to ACE come from one or other of them (94 or 95% Google, 3 or 4% Yahoo!). I have a negligible number of visitors from blogosphere search engines like Technorati, whether via normal searching or tags, and it's the same with Google BlogSearch, so I'm not covering Technorati or BlogSearch in this post.

Realistically, if you want to maximize or just increase traffic to your site or blog, you need to try to get indexed by and then to increase your rankings with the "normal" search engines, because virtually everyone still uses the "normal" search engines as their first port of call, not the specialised blogosphere search engines. Furthermore, the same few big search engines dominate. Independent research also bears out my own experience.1

So if you want to get more traffic to your website, it's a good idea to get indexed, and indexed comprehensively, by at least the big 3 search engines: Google, Yahoo and Microsoft.

Submitting your site to Google, Yahoo, Microsoft and Ask.com

No guarantees..

Remember: submitting your site to the search engines just gets their attention. It doesn't guarantee they'll actually decide to come and visit you. It's the equivalent of "Yoohoo, big boy, come up & see me sometime!" It doesn't mean that they'll necessarily succumb to your blandishments.

In other words, submitting or adding your site to Google etc is better than not doing so, but it's not necessarily enough to persuade them to start indexing your site in the first place or sending their bots round to your site. It takes good links in to your blog to do that, normally.

Below I cover how to try to get your site indexed by the main search engines so that it'll be searchable by users of search engine sites, but the operative word is try.

What are bots or robots and what are search engine indexes?

Bots, robots, web crawlers or spiders are software agents regularly sent out across the Web by search engines to "crawl" or "spider" webpages, sucking in their content, following links from page to page, and bringing it all back home to be incorporated into the vast indexes of content maintained by the search engines in their databases.

Their databases are what you search when you enter a query on Google, Ask.com etc.

Bots can even have names ("user agent identifiers"), e.g. the Googlebot (more on Googlebot) or Yahoo's Slurp. (See the database of bots at robotstxt.org, which may however not always be fully up to date or comprehensive.)

And of course it's not like each search engine service has only one bot - they have armies of the critters ceaselessly spidering the Web.

Also note that not everything retrieved by a bot gets into the search engine's index straightaway; there is usually a time lag between a page being fetched and the index being updated with all the info retrieved by all their bots.

How to get your site indexed by the search engines

So how do you get the bots to start stopping by? Most importantly, by getting links to your site from sites they already know and trust - the higher "ranking" the site in their eyes, the better. The search engines are fussy: they set most store by "recommendations" or "references" in the form of links to your site from sites they already deem authoritative.

How you get those first few crucial links is up to you - flatter, beg, threaten, blag, sell yourself... I'll leave that, and how to improve your search engine rankings once you've got them to start indexing you, to the many search engine optimisation (SEO) experts out there (and see my 6 SEO sayings post), but normally shrinking violeting just won't do it.

Return visits are go!

The good news is, once you manage to seduce the search engine bots into starting to visit your site, they're normally creatures of habit and will then usually keep on coming by regularly thereafter to slurp up the tasty new things you have for them on your site or blog.

How to submit your site to Google, Yahoo!, Microsoft Live Search and Ask.com to request them to crawl your site for their search indexes

- Google: Add your top-level (root) URL to Google (e.g. in the case of ACE it would be http://www.consumingexperience.com without a final slash or anything after the ".com") to request the Googlebot to crawl your site

- Yahoo: Submit your website or webpage URL or site feed URL to Yahoo after you register free with Yahoo and have signed in (I won't cover paid submissions). Again this is to request Yahoo's Slurp to visit your site, but it may not actually do so. (More on Yahoo's Site Explorer.)

- Microsoft: Submit your site to Microsoft Live Search to request the MSNBot to crawl your site.

- Ask.com: You need to submit your sitemap (covered below) to submit your site to Ask.com.

Sitemaps generally and how to use them

Now once the main search engines have started indexing your site, how do you make sure they do that comprehensively?

By feeding them a sitemap.2 Sitemaps help make your site more search engine-friendly by improving how the search engines crawl and index your site, and I've been blogging about them since they were first introduced.

What's a sitemap, and where can I get further information on sitemaps?

A sitemap is a document in XML file format, usually stored on your own website, which sets out the structure of your site by listing, in a standardised way which the search engines' bots will recognise, the URLs of the webpages on your site that you want the bots to crawl.

The detailed sitemaps protocol can be found on Sitemaps.org (the sitemap can also optionally give the bots what's known as "metadata", i.e. further info such as the last time a web page was updated).

See also the Sitemaps FAQs, and Google's help pages on sitemaps.
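To make the format concrete, here is a minimal sketch in Python that builds a tiny sitemap with the standard library. The `urlset`, `url`, `loc` and `lastmod` element names and the namespace come from the Sitemaps.org protocol; the helper name `build_sitemap`, the page URLs and the date are made up purely for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the Sitemaps.org protocol (version 0.9).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for an iterable of (url, lastmod) pairs.

    lastmod is optional metadata; pass None to leave it out.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages, for illustration only.
sitemap_xml = build_sitemap([
    ("http://www.example.com/mypage1.html", "2008-07-01"),
    ("http://www.example.com/mypage2.html", None),
])
print(sitemap_xml)
```

The output would still need an XML declaration and saving to a file before uploading; that part is left out to keep the sketch short.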

Where should or can a sitemap be located or uploaded to?

A sitemap should normally be stored in the root, i.e. top level, directory of your site's Web server (see the Sitemaps protocol) e.g. http://example.com/sitemap.xml.

Important note (my emphasis):

Normally, "...all URLs listed in the Sitemap must use the same protocol (http, in this example) and reside on the same host as the Sitemap. For instance, if the Sitemap is located at http://www.example.com/sitemap.xml, it can't include [i.e. list] URLs from http://subdomain.example.com." (from the Sitemap file location section of the official Sitemaps protocol).

What does that mean? Well, suppose your site is at http://www.example.com and you want to submit a sitemap listing the URLs of webpages from http://www.example.com which you'd like the search engines to crawl; pages like:

http://www.example.com/mypage1.html

http://www.example.com/mypage2.html, etc.

In that example, the search engines will not generally accept your sitemap submission unless your sitemap file is located at http://www.example.com/whateveryoursitemapnameis.xml, and if you try submitting it from anywhere else you'll get an error message. Generally, if the URLs you're trying to include are at a higher level or on a different sub-domain or domain, tough luck.

In other words, they will not generally accept a sitemap listing URLs from http://www.example.com (e.g. http://www.example.com/mypage1.html, http://www.example.com/mypage2.html, etc) if the sitemap is located at:

- http://example.com/sitemap.xml - because it doesn't have the initial "www"

- http://another.example.com/sitemap.xml - because "another" is a different subdomain from "www", or

- http://www.someotherdomain.com/sitemap.xml or indeed http://someotherdomain.com/sitemap.xml - because someotherdomain.com is a totally different domain.

And now, via a different method I'll come to below, you can also submit a cross-site, cross-host or cross-domain sitemap which Yahoo and Microsoft will also accept.
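The location rule above can be sketched as a small Python check. This is only my reading of the "same protocol, same host, same directory or below" rule, not code from any search engine, and the function name `sitemap_may_list` is invented for the example.

```python
from urllib.parse import urlsplit


def sitemap_may_list(sitemap_url, page_url):
    """Rough check: may a sitemap at sitemap_url list page_url?

    Requires the same protocol and host, and the page must sit in the
    sitemap's own directory or somewhere below it.
    """
    s, p = urlsplit(sitemap_url), urlsplit(page_url)
    same_origin = (s.scheme, s.netloc.lower()) == (p.scheme, p.netloc.lower())
    # Directory that holds the sitemap, e.g. "/" for "/sitemap.xml".
    sitemap_dir = s.path.rsplit("/", 1)[0] + "/"
    return same_origin and (p.path or "/").startswith(sitemap_dir)


page = "http://www.example.com/mypage1.html"
for sitemap in (
    "http://www.example.com/sitemap.xml",
    "http://example.com/sitemap.xml",
    "http://another.example.com/sitemap.xml",
    "http://www.someotherdomain.com/sitemap.xml",
):
    print(sitemap, sitemap_may_list(sitemap, page))
```

Only the first sitemap location passes; the other three fail for the reasons given in the list above.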

How do you create a sitemap?

I won't go into the ins and outs of constructing a sitemap now; there are lots of other resources for that, like Google's Sitemap Generator and third party tools. My main focus is on blogs, where sitemap generator tools aren't really relevant - as you'll see below.

Sitemaps for blogs (and other sites with feeds)

This section is about sitemaps for blogs, particularly those using Blogger, but much of what I say will also apply to other sites that put out a site feed.

How do you create and submit a sitemap for a blog? As mentioned above, normally your sitemap must be at the root level of your domain before the search engines will accept it.

But one big issue with many blogs is that, unless you completely control your website (e.g. you have a self-hosted Wordpress blog or use Blogger on your own servers via FTP), you can't just upload any ol' file you like to any ol' folder you like on the server which hosts your blog's underlying files.

If you have free hosting for your blog, e.g. from Google via Blogger (typically on blogspot.com), you're stuck with what the host will let you do in terms of uploading. Which isn't much. They usually limit:

- what types of files you can upload to their servers (e.g. file formats allowed for images on Blogger don't include .ico files for favicons, which to me makes little sense), and

- where you can store the uploaded files - they (the host), not you, decide all that (e.g. video uploads are stored on Google Video, not the Blogspot servers).

But all is not lost. With a blog you already have a ready-made sitemap of sorts: your feed.

How to submit sitemaps for blogs?: your feed is your sitemap

As most bloggers know, your site feed is automatically created by your blogging software and reflects your latest X posts (X is 25 with Blogger, by default), as long as you've turned your feed on i.e. enabled it. (See my introductory tutorial guides on What are feeds (including Atom vs RSS); How to publish and publicise a feed, for bloggers; How to use Feedburner; Quick start guide to using feeds with Feedburner for the impatient; and Podcasting - with a quickstart on feeds and Feedburner, and a guide to Blogger feed URLs.)

You can in fact submit a sitemap by submitting your feed URL: see how to submit a sitemap to Google for Blogger blogs (longer version), and you can even "verify" your Blogger sitemap to get more statistical information about how the Googlebot crawls your blog.

Now, your last 25 posts is not your complete blog, that is true. A sitemap should ideally map out all your blog posts, not just the last 25 or so. But remember, bots faithfully follow links. The vast majority of blogs are set up to have, in their sidebar, an archives section. This has links to archive pages for the entire blog, each of which either links to or contains the text of all individual posts for the archive period (week or month etc), or indeed both. And many blog pages have links to the next and previous posts, while Blogger blogs have links to the 10 most recent posts.

So, even starting from just a single blog post webpage, whether it's the home page or an individual item page / post page, a bot should be able to index the entire blog by following those links. That's why my pal and sometime pardner Kirk always says there's not much point in putting out a sitemap for blogs.

What feed URL should you submit?

Because technically the feed, as with other sitemaps, has to be located at the highest level directory of the site you want the search engines to crawl, you shouldn't try to submit a Feedburner feed as your sitemap, as it won't be recognised; you need to submit your original source site feed.

For Blogger blogs you'll recall (see this post on Blogger feed URLs) that your blog's original feed URL is generally:

http://BLOGNAME.blogspot.com/feeds/posts/default

- and generally that feed contains your last 25 posts.

For sitemap submission purposes, if http://BLOGNAME.blogspot.com/feeds/posts/default doesn't work, you can alternatively use: http://yourblogname.com/atom.xml or http://yourblogname.com/rss.xml.

Now here's a trick - if you use Blogger you can in fact submit your entire blog, with all your posts (not just your most recent 25), to the search engines. This is done by using a combination of the max-results and start-index parameters (see post on Blogger feed URLs), and knowing that - for now, at least - Blogger allows you a maximum max-results of 500 posts.

Let's say that you have 600 posts in your blog. You would make 2 separate submissions to each of the search engines, of 2 different URLs, to catch all your posts:

http://BLOGNAME.blogspot.com/feeds/posts/default?start-index=1&max-results=500

http://BLOGNAME.blogspot.com/feeds/posts/default?start-index=501&max-results=500

The first URL produces a "sitemap" of the 500 most recent posts in your blog; the second URL produces a sitemap starting with the 501st most recent post, and all the ones before it up to 500 (if you have 600 total you don't need &max-results=500 at all, in fact; but you might if you had over 1000 posts). And so on. If in future Blogger switch the max-results back to 100 or some other number, just change the max-results figure to match, and submit more URLs instead - you can check by viewing the feed URL in Firefox and seeing if it maxes out at 100 (or whatever) even though you've used =500 for max-results. (For FTP blogs it's similar but you'll need to use your blog ID instead of main URL, see my post on Blogger feed URLs.)
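If you'd rather not work out the start-index values by hand, the arithmetic can be sketched in a few lines of Python. The function name `blogger_sitemap_urls` is my own invention, and the page size of 500 is simply the current Blogger maximum mentioned above, so treat both as assumptions.

```python
def blogger_sitemap_urls(blog_name, total_posts, page_size=500):
    """List the feed URLs needed to cover every post in a Blogspot blog.

    page_size is the largest max-results value Blogger will honour
    (500 at the time of writing; lower it if Blogger changes the cap).
    """
    base = f"http://{blog_name}.blogspot.com/feeds/posts/default"
    return [
        f"{base}?start-index={start}&max-results={page_size}"
        for start in range(1, total_posts + 1, page_size)
    ]


# A blog with 600 posts needs two submissions.
for url in blogger_sitemap_urls("BLOGNAME", 600):
    print(url)
```

For 600 posts this gives the same two URLs as above; if the cap dropped back to 100, passing `page_size=100` would give six URLs instead.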

For WordPress blogs - how to find the feed locations. I don't know if you can do similar clever things with query parameters in WordPress so as to submit a full sitemap.

How to submit a sitemap - use these ping forms

To submit a sitemap, assuming it's already created and uploaded and you know its URL (which you will for a blog site feed), you just need to use an HTTP request (ping), or submit the URL of the sitemap via the relevant search engine's sitemap submission page (see Sitemaps.org on how to submit, and the summary of ping links at Wikipedia).

So I've included some forms below to make it easier for you to submit your sitemap to various search engines. Don't forget to include the "http://" or it won't work.
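For the curious, the HTTP request (ping) is just a specially formed URL. The sketch below builds one in Python using the generic format given on Sitemaps.org, `<searchengine_URL>/ping?sitemap=<URL-encoded sitemap URL>`. The host `searchengine.example` and the helper name `ping_url` are placeholders of mine; each search engine publishes its own real ping address.

```python
from urllib.parse import quote


def ping_url(search_engine_url, sitemap_url):
    """Build a sitemap ping URL in the generic Sitemaps.org format."""
    if not sitemap_url.startswith(("http://", "https://")):
        raise ValueError("sitemap URL must include the http:// prefix")
    # Everything after "sitemap=" has to be URL-encoded.
    encoded = quote(sitemap_url, safe="")
    return f"{search_engine_url.rstrip('/')}/ping?sitemap={encoded}"


# searchengine.example is a stand-in, not a real ping endpoint.
print(ping_url("http://searchengine.example",
               "http://www.example.com/sitemap.xml"))
```

Visiting the resulting URL in a browser (or fetching it with any HTTP client) is what actually sends the ping; the sketch deliberately stops short of making a network request.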

How to submit a sitemap to Google

- Google prefer that the very first time you submit your sitemap to them, you do so via Google Webmaster Tools (it's free to create an account or you can sign in with an existing Google Account). This is a bit cumbersome but you can then get stats and other info about how they've crawled your site (and how they've processed your sitemap) by logging in to your account in future:

- Get a Google Account if you haven't already got one (if you have Gmail, you can use the same sign in details for your Google Account)

- Login with your Google Account to Google Webmaster Tools (GWT).

- Add your site / blog and then add your sitemap (more info) - if you're on Blogger see below for the best URL to use.

- Then you can resubmit an updated sitemap after that, including via the form below.

- While Google prefer that you register for a Google Webmaster Tools account, the easiest way to submit a sitemap is just to enter your sitemap URL below (including the initial http://) and hit Submit. Then bookmark the resulting page (i.e. save to your browser Favorites) and if in future you want to re-submit your sitemap, just go back to the bookmark (opens in a new window):

- Note: this "HTTP request" or "ping" method seems to work for URLs which are rejected when trying the Webmaster Tools method above (e.g. because they're in the wrong location - wrong domain, as explained earlier), but Google may well ignore the ping unless you've previously submitted your URL via Webmaster Tools, and who knows whether the sitemap will be accepted for the correct domain when they get to processing it? So it's probably better to use the Webmaster Tools method first, unless you can't get it to work.

How to submit a sitemap to Yahoo!

- Enter your sitemap URL below (including the http://) and hit Submit. Then bookmark the resulting page (i.e. save to your browser Favorites) and if in future you want to re-submit your sitemap, just go back to the bookmark (opens in a new window):

- Note: as with Google, this "HTTP request" or "ping" method seems to work for URLs which are rejected when trying the method below (e.g. because they're in the "wrong" domain), but again who knows whether the sitemap will be accepted for the correct domain when they get to processing it?

- Alternatively, more cumbersome but you can then get stats about how they've crawled your site by logging in to your account in future:

- Get a Yahoo ID (if you have Yahoo Mail you already have one)

- Login with your Yahoo ID to Yahoo! Site Explorer and add your sitemap or feed-as-sitemap (more info).

How to submit a sitemap to Microsoft Live?

Microsoft were later to the Sitemaps party but Live Search does now support sitemaps.

- Enter your sitemap URL below (including the http://) and hit Submit. Then bookmark the resulting page (i.e. save to your browser Favorites) and if in future you want to re-submit your sitemap, just go back to the bookmark (opens in a new window):

Microsoft also have a Webmaster site where you can submit your sitemap.

How to submit a sitemap to Ask.com

- Enter your sitemap URL below (including the http://) and hit Submit; again, this method uses "HTTP request" or "ping". Bookmark the resulting page and go back to the bookmark to re-submit in future (opens in a new window):

(more info).

- NB for Blogger feeds, don't use the /posts/default format; you'll have to use http://yourblog.blogspot.com/atom.xml

- Note: for some reason Ask.com will not currently accept sitemaps which don't point to a specific file like .xml or .php etc - so it won't accept feeds like Wordpress blog feeds in the format http://example.com/?feed=rss, or Blogger feeds in the format http://yourblogname.com/feeds/posts/default or indeed http://yourblogname.com/feeds/posts/default?alt=atom (but e.g. http://yourblogname.com/atom.xml does work with Ask.com).

Updates to your blog / site - how to ping the search engines

Let's assume that the search engine crawlers are now regularly visiting your site. But you don't know at what intervals or how frequently.

What if you update your blog or site by adding new content or editing some existing content? You'll want the search engines to know all about it ASAP so that they can come and slurp up your shiny new or improved content, so that their indexes are as up to date as possible. It's annoying and offputting for visitors to come to your site via a search only to find that the content they were looking for isn't there, or is totally different (they might still be able to get to what they want via the search engine's cache, but that's a different matter).

So, how do you get the search engine bots to visit you ASAP after an update, and pick up your new or edited content? By what's called "pinging" them. How you ping a search engine depends on the search engine, not surprisingly (see e.g. for Yahoo).

But guess what? To ping a search engine, all you have to do is re-submit your sitemap to it. I've already provided the ping forms above, so once you've pinged and saved the bookmark/favorite you just need to click that bookmark. Alternatively, you can also login to a search engine's site management page and use the Resubmit (or the like) buttons there.

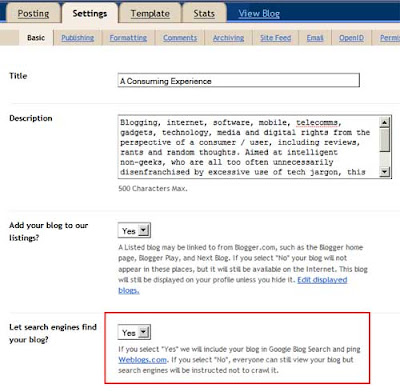

Also, blogging platforms like Blogger will automatically ping the search engines for you when you update your blog. In Blogger this should be activated by default: go to Dashboard > Settings > Basic and check "Let search engines find your blog?" - if it's set to "Yes" then Blogger will ping Weblogs.com, a free ping server, when you update your blog, so that search engines around the Net which get info from Weblogs.com will know to come and check out your updated pages. If your blogging software doesn't do that, you can always use Feedburner's PingShot (which I've previously blogged - see my beginners' detailed introduction to Feedburner).

How do you submit just changed or updated pages?

Sites with zillions of URLs can in fact have more than one sitemap (with an overall sitemap index file) and just submit the sitemaps for recently changed URLs. I'm not going to go into that here; sites that big will have webmasters who'll know a lot more about all this than I do.

Now recall that with blogs the easiest way to provide a sitemap, if you can't upload your own sitemap file into the right directory, is to use your feed as the sitemap.

What if you've updated some old posts on your blog, and you want the search engines to crawl the edited content of the old posts?

Aha. Well if you're on Blogger, there is a way to submit a sitemap of just your most recently-updated posts to the search engines.

This is because Blogger automatically produces a special feed that contains just your most recently-updated posts - not your most recently-published ones, but recently-updated ones. So even an old post you just updated will be in that feed. See my post on Blogger feed URLs (unofficial Blogger feeds FAQ) for more info, but essentially the URL you should use for your sitemap in this situation is

http://YOURBLOGNAME.blogspot.com/feeds/posts/default?orderby=updated (changing it to your blog's URL of course)

- for instance for ACE it would be http://www.consumingexperience.com/feeds/posts/default?orderby=updated

The "orderby=updated" ensures that the feed will contain the most recently-updated posts, even if they were first published some time ago. If I wanted it to contain just the 10 most recently-updated posts, irrespective of how many posts are normally in my main feed, I'd use:

http://www.consumingexperience.com/feeds/posts/default?orderby=updated&max-results=10
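Here is a small Python sketch that puts the "updated" feed URL together from its parts. The `orderby` and `max-results` parameters are the Blogger ones described above; the helper name `updated_feed_url` is hypothetical.

```python
from urllib.parse import urlencode


def updated_feed_url(blog_root, max_results=None):
    """Build the Blogger feed URL listing the most recently updated posts.

    blog_root is the blog's home URL; max_results optionally limits
    how many posts the feed contains.
    """
    params = {"orderby": "updated"}
    if max_results is not None:
        params["max-results"] = max_results
    return f"{blog_root.rstrip('/')}/feeds/posts/default?{urlencode(params)}"


print(updated_feed_url("http://www.consumingexperience.com"))
print(updated_feed_url("http://www.consumingexperience.com", max_results=10))
```

The two printed URLs match the ones given above for ACE, with and without the limit of 10 posts.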

Furthermore, Blogger automatically specifies the "updated" feed as your sitemap in your robots.txt file. Which I'll now explain.

Sitemaps auto-discovery via robots.txt files

It's a bit of a pain to have to keep submitting your sitemap to the various search engines every time your site is updated, though obviously it helps if your blogging platform automatically pings Weblogs.com.

Also, the search engine bots don't have an easy time figuring out where your sitemap is located (assuming they're indexing your content in the first place, of course). Remember that sitemaps don't have to be feeds - feeds are just one type of XML file which are acceptable as sitemaps. People can build their own detailed sitemap files, name them anything (there's no standardisation on what to name sitemap files), and store them anywhere as long as they're at their domain's root level.

If you actively submit your sitemap to a search engine manually or through an automatic pinging service, that's fine. But if you don't, how's a poor hardworking lil bot, diligently doing its regular crawl, going to work out exactly what your sitemap file is called and where it lives?

So arose a bright idea - why not use the robots.txt file for sitemap autodiscovery?

What are robots.txt files?

Robots.txt files are simple text files found behind the scenes on virtually all websites.

Now a robots.txt file was initially designed to do the opposite of a sitemap - basically, to exclude sites from being indexed, by telling search engine bots which bits of a site not to index, or rather asking them not to index those bits. (There are other ways (i.e. meta tags) to do that, but I won't discuss them here.)

Legit bots are polite and will abide by the "no entry" signs because it's good form by internet standards - not because they can be made to, or because they'll be torn apart bit by bit if they don't. (It's important to note that using robots.txt is no substitute for proper security to protect any truly sensitive content - the robots.txt file really contains requests rather than orders, and won't be effective to stop any pushy person or bot who decides to ignore it. Whether anyone can sue for trying to get round robots.txt files is a different matter yet again.)

Using robots.txt it's possible to block specific (law-abiding) bots by name ("user-agent"), or some or all of them; and to block crawlers by sub-directory (e.g. everything in subfolder X of folder Y is off limits), or even just one webpage - for more details see e.g. Wikipedia, the robots.txt FAQ, Google's summary, "using robots.txt files" and Google's help. Google have even provided a robots.txt generator tool, but it won't work for Blogger blogs, see below.
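To see how a well-behaved bot reads those rules, here is a short sketch using Python's standard `urllib.robotparser` module. The robots.txt contents, the bot name "BadBot" and the `/private/` folder are all invented for the example.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt: one named bot is shut out entirely, and
# every other bot is asked to keep out of a single folder.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

home = "http://yoursitename.com/index.html"
secret = "http://yoursitename.com/private/notes.html"

badbot_home = parser.can_fetch("BadBot", home)        # named bot, any page
googlebot_home = parser.can_fetch("Googlebot", home)  # other bot, open page
googlebot_secret = parser.can_fetch("Googlebot", secret)  # other bot, blocked folder
print(badbot_home, googlebot_home, googlebot_secret)
```

Only the middle check comes back as allowed. Remember this only models a polite bot; nothing here stops a rude one.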

Where's the robots.txt file?

A robots.txt file, like a sitemap, has to be located at the root of the domain e.g. http://yoursitename.com/robots.txt. (It can't be in a sub-directory e.g. http://yoursitename.com/somefolder/robots.txt, because the system wasn't designed that way. Well you could put it in a subfolder, but search engines will only look for it at root level, so they won't find it.)

Remember that again, as for sitemaps, different subdomains are technically separate - so they could have different robots.txt files, e.g. http://yoursitename.com/robots.txt is not in the same domain as http://www.yoursitename.com/robots.txt.
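As a quick illustration of the "root level only" rule, this Python sketch works out where a bot would look for robots.txt given any page on a site. The function name `robots_txt_url` is my own.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url):
    """Return the only place a bot will look for robots.txt for page_url."""
    parts = urlsplit(page_url)
    # Keep the protocol and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("http://yoursitename.com/somefolder/page.html"))
print(robots_txt_url("http://www.yoursitename.com/somefolder/page.html"))
```

The two results differ, because the "www" version counts as a separate host with its own robots.txt.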

However, unlike sitemap files, robots.txt files do have a standard name - robots.txt, wouldja believe. So it's dead easy to find the robots.txt file for a particular domain or sub-domain.

Using robots.txt files for sitemap auto-discovery

Given the ubiquity of robots.txt files, the thought was, why not use them for sitemaps too? More accurately, why not use them to point to where the sitemap is?

That was the solution agreed by the main search engines Google, Yahoo, Microsoft and Ask.com for sitemap auto-discovery (Wikipedia): specify the location of your sitemap (or sitemap index if you have more than one sitemap) in your robots.txt file, and bots can auto-discover the location by reading the robots.txt file, which they can easily find on any site. (Since then, those search engines have also agreed on standardisation of robots exclusion protocol directives, big pat on the head chappies!)

Now how do you use a robots.txt file for your sitemap? In the robots.txt file for yoursitename.com (which will be located at http://yoursitename.com/robots.txt), just stick in an extra line like this (including the http etc):

sitemap: http://yoursitename.com/yoursitemapfile.xml

And then, simple, the bots will know that the sitemap for yoursitename.com can be found at the URL stated. The main search engines (including Yahoo and Microsoft) have agreed that they'll accept it as a valid sitemap even if it's in a different domain and even if you've not verified your site. So, to manage cross-host or cross-site sitemap submission, just list the sitemap's location in the robots.txt file of the site whose pages it covers.

Adding a "sitemap" line in the robots.txt file to indicate where the sitemap file is located has two benefits. The search engines regularly download robots.txt files from the sites they crawl (e.g. Google re-downloads a site's robots.txt file about once a day), so they'll know exactly where the sitemap file for a site is. They'll also regularly fetch that sitemap file automatically, and therefore know to crawl updated URLs as indicated in that file.
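Auto-discovery from the bot's side can be sketched in Python like this: read the robots.txt text and pull out any "sitemap" lines. This is a simplified illustration rather than how any particular search engine does it, and the function name `sitemap_urls_from_robots` and the sample file are assumptions of mine.

```python
def sitemap_urls_from_robots(robots_txt):
    """Return the sitemap URLs declared in the text of a robots.txt file."""
    urls = []
    for line in robots_txt.splitlines():
        # Drop comments, then split "key: value" at the first colon only,
        # so the colon inside "http://" stays part of the value.
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


# Made-up robots.txt for yoursitename.com.
SAMPLE = """\
User-agent: *
Disallow: /private/

sitemap: http://yoursitename.com/yoursitemapfile.xml
"""

found = sitemap_urls_from_robots(SAMPLE)
print(found)
```

The key is matched case-insensitively, so "sitemap:" and "Sitemap:" are both picked up.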

Can you create or edit your robots.txt file to add the sitemap location?

Now bloggers will have been thinking, so what if you can now use robots.txt to tell most of the search engines where your sitemap is?

First, unless you host your blog files on your own servers (e.g. self-hosted WordPress blogs or FTP Blogger blogs or Movable Type blogs), you won't be able to upload a robots.txt file to the root of your domain. For example users of Blogger custom domain or Blogspot.com blogs can't upload a robots.txt file.

Second, is there any point in doing that even if you could, given that for most blogs the sitemap is just the site feed, which the search engines will get to regularly anyway?

Well, there is, at least for Blogger users.

Blogger's robots.txt files

For the first issue, Blogger creates and uploads a robots.txt file automatically for Blogspot.com and custom domain users.

For instance, my blog's robots.txt file is at http://www.consumingexperience.com/robots.txt (and as I have a custom domain, http://consumingexperience.blogspot.com/robots.txt also redirects to http://www.consumingexperience.com/robots.txt - which is as it should be). You can check the contents of your own just by going to http://YOURBLOGNAME.blogspot.com/robots.txt

What Blogger have put in that robots.txt file also helps answer the second issue. Here's the contents of my blog's robots.txt file:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search

Sitemap: http://www.consumingexperience.com/feeds/posts/default?orderby=updated

The sitemap line is there, as it should be. And it gives as my sitemap the URL of the Blogger feed which lists the posts which have been most recently updated in my blog. Not the default feed URL which lists the most recently published new posts, but the feed which shows my most recently updated posts. Even though the location of that "updated" feed doesn't follow the rule about having to be located in the root of the domain concerned, that's fine because it's listed in my blog's robots.txt file, so the major search engines will accept it, and will regularly spider my updated posts, so even if I've just changed an old post, the changed contents of that old post will be properly re-indexed. And so too will yours - if you're on Blogger, at least.
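As a rough illustration of how a crawler might read a robots.txt file like mine, here's a minimal Python sketch using the standard library's urllib.robotparser (the site_maps() call needs Python 3.8 or later). The post path in the example is made up purely for illustration, and the real search engine bots have their own parsers, so treat this as a sketch of the rules rather than what Googlebot actually runs.

```python
from urllib.robotparser import RobotFileParser

# The robots.txt contents quoted in this post.
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search

Sitemap: http://www.consumingexperience.com/feeds/posts/default?orderby=updated
"""

SITE = "http://www.consumingexperience.com"


def read_robots(text):
    """Parse robots.txt text (no network access) and return the parser."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


parser = read_robots(ROBOTS_TXT)

# site_maps() returns None when there is no Sitemap line, hence the "or []".
sitemaps = parser.site_maps() or []
print("Sitemap URLs:", sitemaps)

# Generic bots are kept out of /search (the label and search result pages)...
print(parser.can_fetch("*", SITE + "/search?q=sitemap"))

# ...but may fetch ordinary post pages (hypothetical path, for illustration only).
print(parser.can_fetch("*", SITE + "/2008/07/some-post.html"))

# The AdSense bot has an empty Disallow line, i.e. nothing is off limits to it.
print(parser.can_fetch("Mediapartners-Google", SITE + "/search?q=sitemap"))
```

To try it on your own blog's file, paste the contents of http://YOURBLOGNAME.blogspot.com/robots.txt into ROBOTS_TXT and change SITE to match.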

More info

- See the Sitemaps protocol and FAQ

- Robotstxt site and Google intro

- Controlling how search engines access and index your website; The Robots Exclusion Protocol, more on REP and still more on REP

- Google's handy table of Robot Exclusion Protocol directives; dealing with "/2008/07/blocked_by_robots.txt" problems

- Googlebot, meta tags & robots.txt and more on the Googlebot

Notes:

1. Of the top 50 web properties in the USA in May 2008, Google Sites ranked as no. 1 followed by Yahoo! Sites and then Microsoft Sites and AOL (comScore Media Metrix); and Nielsen Online told a similar story with the top 3 of the top 10 US Web sites belonging to Google, Yahoo and Microsoft. In the UK, during 2007 Google was the most popular website by average monthly UK unique audience as well as the most visited by average monthly UK sessions (Nielsen). Of all searches conducted in the Asia Pacific in April 2008, 39.1% were on Google Sites and 24% on Yahoo! Sites (comScore Asia-Pacific search rankings for April 2008). In the USA in May 2008, 61.8% of searches were on Google Sites, 20.6% on Yahoo! Sites and 8.5% on Microsoft Sites, with AOL and Ask having 4.5% each (comScore May 2008 U.S. search engine rankings). In the UK in April 2008, Google Sites dominated with 74.2% of all searches, with the second, eBay, at only 6%, followed by Yahoo! Sites (4.3%) and Microsoft Sites (3.4%) (comScore April 2008 UK search rankings).

2. A short history of Sitemaps. Google introduced sitemaps in mid-2005 with the aim of "keeping Google informed about all of your new web pages or updates, and increasing the coverage of your web pages in the Google index". It then extended the service: adding submission of mobile sitemaps, gradually improving its informational aspects and features for webmasters in Google Webmaster Tools (a broader service which replaced "Sitemaps"), and even supporting multiple sitemaps in the same directory. Google rolled Sitemaps out for sites crawled for Google News in November 2006 and Google Maps in January 2007.

In November 2006 Google got Microsoft and Yahoo to agree to support the Sitemaps protocol as an industry standard and they launched Sitemaps.org. In April 2007 Ask.com also decided to support the Sitemaps protocol.

Another excellent Sitemaps-related innovation in 2007, at Yahoo!'s instigation this time, was sitemaps auto-discovery. Third-party sitemap tools have also proliferated. There have been teething issues, but it all seems to be working now. There are even video sitemaps, sitemaps for source code and sitemaps for Google's custom search engines, but that's another matter...

2 comments:

Enjoyed the read. Just last week I started a new web site and wanted fast indexing, so I blogged on Digg and got indexed by Google in just a few hours!

I hope it helps someone else to get listed quick! Good luck.:)

www.sunsetteethwhitening.co.uk

I am undecided as to whether site maps help or hinder a website. I am conscious that on the one hand, correctly inserting a site map via GWebmaster Tools means confirming a Google account and WM Tools account. And that this then needs to be verified (read: G pushing importance of getting a site map as a strategy towards acquiring a certified mailing list of website owners for future marketing strategies – and there is nothing wrong with that, for those not specialized in this field).

Equally I’ve seen sites perform just as well that have absolutely nothing to do with the specialist services offered by the search engine, so I’m still undecided.

If I see a site is not getting indexed well, I’ll add a site map (and by that I mean XML sitemap loaded into Google webmaster tools, in all cases a site map is featured on a clients website)
